Jerry Kaplan: The Law of Artificial Intelligence

19 Feb 2015

Jerry Kaplan has been an interesting force in the Stanford AI community over the past couple of years. He’s been a major player in Silicon Valley since the 80s, was one of the early pioneers of touch-sensitive tablets and online auctions, and wrote a book about his 1987 pen-based operating system company (which was ahead of its time, unfortunately for the company). Recently, however, he seems to have taken on a new mission: fostering a broader discussion of the history and philosophy of AI, especially on the Stanford campus. He has given a number of talks on these topics and taught a class that brought in other AI speakers.

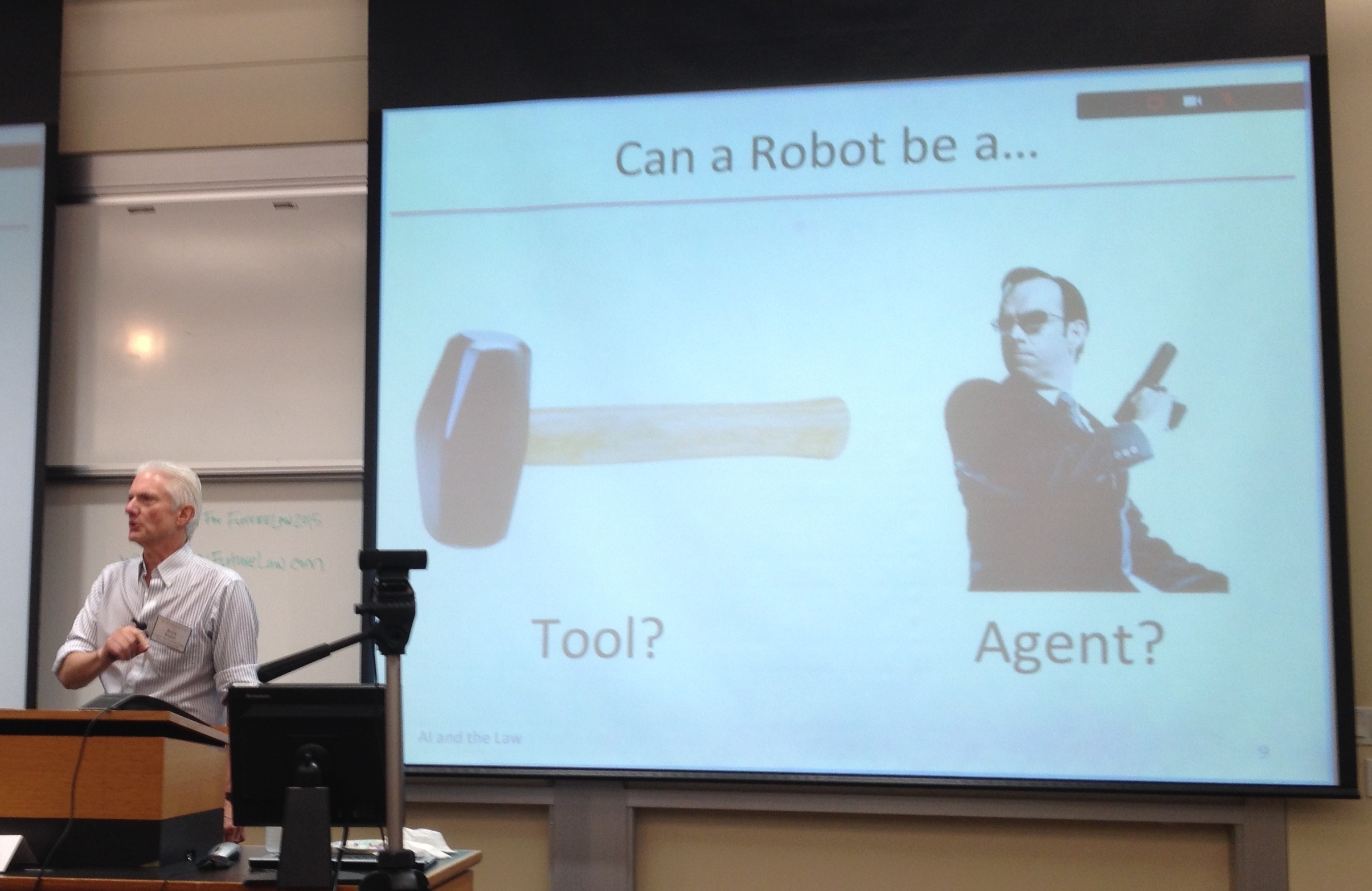

His most recent talk, through the Stanford CodeX center, was partially a plug for his upcoming book, “Humans Need Not Apply: A Guide to Wealth and Work in the Age of Artificial Intelligence,” but focused specifically on how the legal system should handle the rise of autonomous systems. He draws a distinction between a system being “conscious” (which is far off for machines, and more of a philosophical question) and a system being a “moral agent” that is legally considered an “artificial person.” Arguably corporations already fall under this definition, since they have rights (e.g. free speech) and can face legal charges independently of their employees (e.g. in the BP Deepwater Horizon spill).

Can an AI be a moral agent? Jerry argues that systems like autonomous cars are able to predict the consequences of their actions and are in control of those actions (without requiring human approval), so they meet at least a basic definition of moral agency. As has been discussed by others, self-driving cars will have to make decisions analogous to the philosophical “trolley problem,” in which every possible action results in harm to humans and value judgments must be made. Since AIs can (implicitly or explicitly) have some encoded moral system, they should bear some responsibility for their actions.

He proposed a few ways of actually enforcing this in practice. The simplest would be some type of licensing system, in which every AI system meeting some threshold of complexity would need to be registered with the government. If an AI misbehaves in some way, such as a driverless taxi driving recklessly fast in hopes of a better tip, then its license would be revoked. The AI might just be destroyed, or, if we think that the problem is fixable, it could be “rehabilitated,” e.g. with new training data. There are many possible complications here (I asked him how this would work if the AI is partially distributed in the cloud), but this general approach makes sense.

I’m happy that we’re handing over more and more control to AIs, which will free humans from mundane tasks and probably outperform them in many areas (though this will require some restructuring of the economy, which is a topic for another post). There are, however, some very practical problems that we need to address sooner rather than later, and I’m glad to see that technically minded people like Jerry are diving into these issues.

Comments? Complaints? Contact me @ChrisBaldassano