Live-blogging SfN 2017

16 Nov 2017

[I wrote these posts during the Society for Neuroscience 2017 meeting, as one of the Official Annual Meeting Bloggers. These blog posts originally appeared on SfN’s Neuronline platform.]

SuperEEG: ECoG data breaks free from electrodes

The “gold standard” for measuring neural activity in human brains is ECoG (electrocorticography), using electrodes implanted directly onto the surface of the brain. Unlike methods that measure blood oxygenation (which have poor temporal resolution) or that measure signals on the scalp (which have poor spatial resolution), ECoG data has both high spatial and temporal precision. Most of the ECoG data that has been collected comes from patients who are being treated for epileptic seizures and have had electrodes implanted in order to determine where the seizures are starting.

The big problem with ECoG data, however, is that each patient typically only has about 150 implanted electrodes, meaning that we can only measure brain activity in 150 spots (compared to about 100,000 spots for functional MRI). It would seem like there is no way around this - if you don’t measure activity from some part of the brain, then you can’t know anything about what is happening there, right?

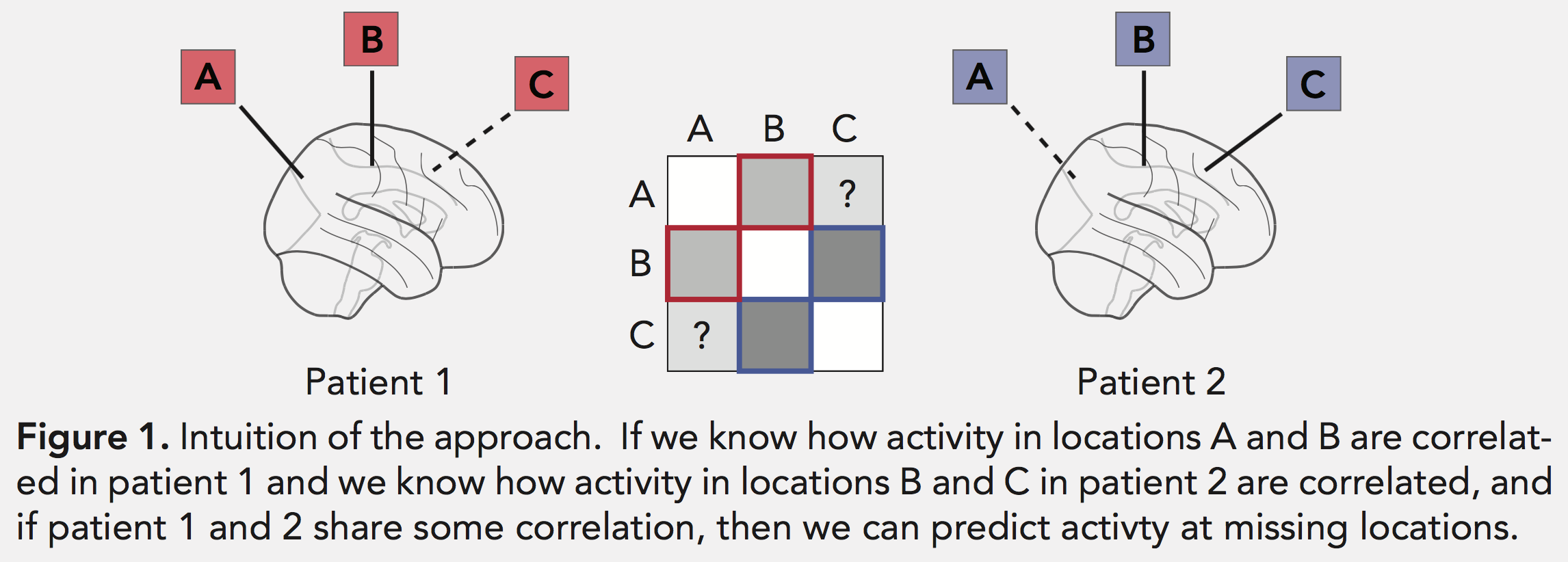

Actually, you can, or at least you can guess! Lucy Owen, Andrew Heusser, and Jeremy Manning have developed a new analysis tool called SuperEEG, based on the idea that measuring from one region of the brain can actually tell you a lot about another unmeasured region, if the two regions are highly correlated (or anti-correlated). By using many ECoG subjects to learn the correlation structure of the brain, we can extrapolate from measurements in a small set of electrodes to estimate neural activity across the whole brain.

Figure from their SfN poster

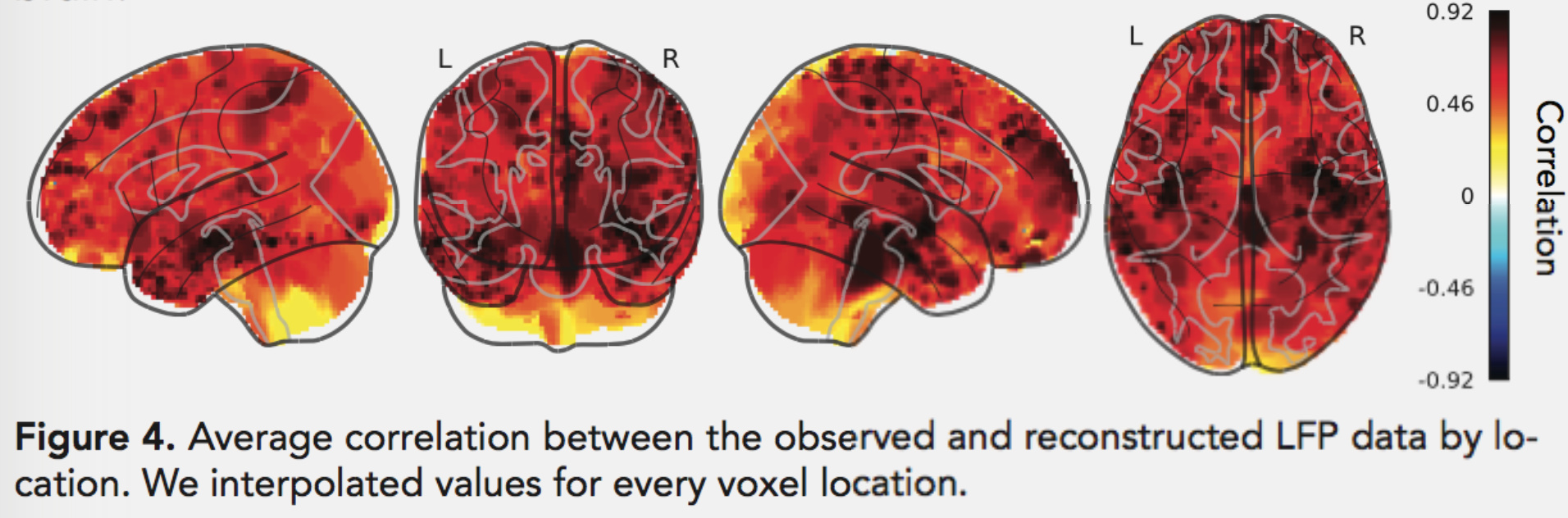
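To make the extrapolation idea concrete, here is a minimal sketch in Python. It is not the SuperEEG implementation: it assumes we already have a covariance matrix between brain locations (learned from other patients) and treats activity as zero-mean Gaussian, so the estimate at unmeasured locations is just the standard conditional mean. The function name `estimate_unobserved` and the `ridge` parameter are my own illustrative choices.

```python
import numpy as np

def estimate_unobserved(cov, observed_idx, observed_activity, ridge=1e-6):
    """Estimate activity at unmeasured locations from measured ones.

    cov: (n_locations, n_locations) covariance between all locations,
         assumed to have been learned from other patients.
    observed_idx: indices of locations with electrodes in this patient.
    observed_activity: (n_observed, n_timepoints) zero-mean recordings.
    """
    observed_idx = np.asarray(observed_idx)
    unobserved_idx = np.setdiff1d(np.arange(cov.shape[0]), observed_idx)
    cov_oo = cov[np.ix_(observed_idx, observed_idx)]
    cov_uo = cov[np.ix_(unobserved_idx, observed_idx)]
    # Small ridge term keeps the linear solve stable (illustrative choice).
    cov_oo = cov_oo + ridge * np.eye(len(observed_idx))
    # Conditional mean of a zero-mean Gaussian: cov_uo @ inv(cov_oo) @ x_o
    estimate = cov_uo @ np.linalg.solve(cov_oo, observed_activity)
    return unobserved_idx, estimate

# Toy demo: 6 locations driven by 2 shared sources, 4 of them "implanted".
rng = np.random.default_rng(0)
mixing = rng.normal(size=(6, 2))
cov = mixing @ mixing.T + 0.1 * np.eye(6)
data = rng.multivariate_normal(np.zeros(6), cov, size=500).T
observed = [0, 1, 2, 3]
hidden, est = estimate_unobserved(cov, observed, data[observed])
for loc, row in zip(hidden, est):
    r = np.corrcoef(row, data[loc])[0, 1]
    print(f"location {loc}: correlation with true activity = {r:.2f}")
```

The more strongly an unmeasured location covaries with the implanted ones, the better its estimate will be, which is why some regions end up better reconstructed than others.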

This breaks ECoG data free from little islands of electrodes and allows us to carry out analyses across the brain. Not all brain regions can be well-estimated using this method (due to the typical placement locations of the electrodes and the correlation structure of brain activity), but it works surprisingly well for most of the cortex:

This could also help with the original medical purpose of implanting these electrodes, by allowing doctors to track seizure activity in 3D as it spreads through the brain. It could even be used to help surgeons choose the locations where electrodes should be placed in new patients, to make sure that seizures can be tracked as broadly and accurately as possible.

Hippocampal subregions growing old together

To understand and remember our experiences, we need to think both big and small. We need to keep track of our spatial location at broad levels (“what town am I in?”) all the way down to precise levels (“what part of the room am I in?”). We need to keep track of time on scales from years to fractions of a second. We need to access our memories at both a coarse grain (“what do I usually bring to the beach?”) and a fine grain (“remember that time I forgot the sunscreen?”).

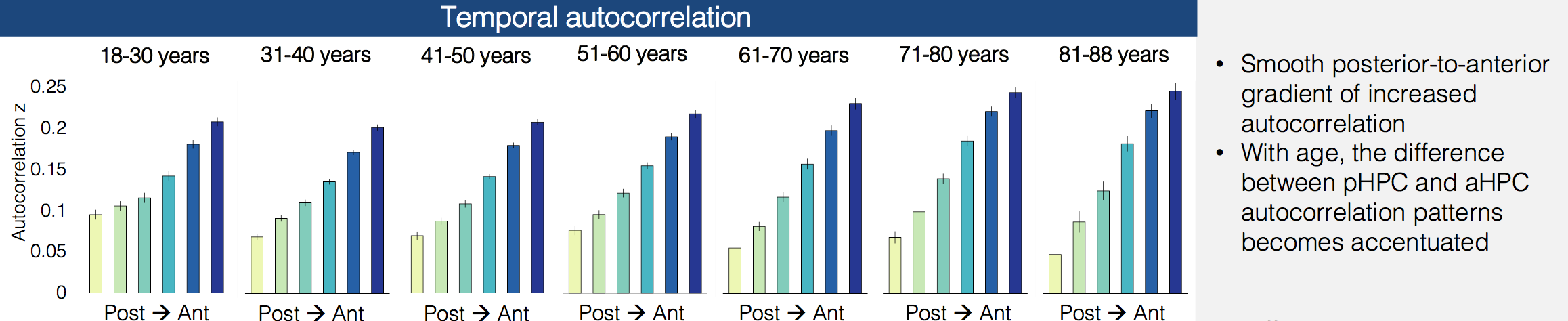

Data from both rodents and humans has suggested that different parts of the hippocampus keep track of different levels of granularity, with posterior hippocampus focusing on the fine details and anterior hippocampus seeing the bigger picture. Iva Brunec and her co-authors recently posted a preprint showing that temporal and spatial correlations change along the long axis of the hippocampus - in anterior hippocampus all the voxels are similar to each other and change slowly over time, while in posterior hippocampus the voxels are more distinct from each other and change more quickly over time.
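For readers who want to try this kind of analysis, here is a rough sketch of how the two properties could be quantified from a voxels-by-time data matrix. These are my own simplified measures (mean pairwise correlation between voxels, and lag-1 autocorrelation within each voxel), not necessarily the exact ones used in the preprint, and the simulated "anterior-like" and "posterior-like" data are purely illustrative.

```python
import numpy as np

def inter_voxel_similarity(data):
    """Mean pairwise Pearson correlation between voxel timecourses.

    data: (n_voxels, n_timepoints) array.
    """
    corr = np.corrcoef(data)
    upper = np.triu_indices_from(corr, k=1)
    return corr[upper].mean()

def lag1_autocorrelation(data):
    """Mean over voxels of each timecourse's correlation with itself
    shifted by one timepoint (higher means slower change)."""
    x = data - data.mean(axis=1, keepdims=True)
    num = (x[:, :-1] * x[:, 1:]).sum(axis=1)
    den = (x ** 2).sum(axis=1)
    return (num / den).mean()

# Simulated data: 20 voxels, 300 timepoints per region (hypothetical).
rng = np.random.default_rng(0)
n_vox, n_time = 20, 300
# "Anterior-like": one slow signal shared by all voxels, plus a little noise.
slow = np.convolve(rng.normal(size=n_time), np.ones(10) / 10, mode="same")
anterior = slow + 0.1 * rng.normal(size=(n_vox, n_time))
# "Posterior-like": independent, rapidly changing signal in each voxel.
posterior = rng.normal(size=(n_vox, n_time))

for name, region in [("anterior", anterior), ("posterior", posterior)]:
    print(name,
          "similarity:", round(inter_voxel_similarity(region), 2),
          "autocorrelation:", round(lag1_autocorrelation(region), 2))
```

On real data, `data` would be the voxel timecourses from an anterior or posterior hippocampal region of interest, and the same two numbers could be compared across regions or across age groups.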

In their latest work, they look at how these functional properties of the hippocampus change over the course of our lives. Surprisingly, this anterior-posterior distinction actually increases with age, becoming the most dramatic in the oldest subjects in their sample.

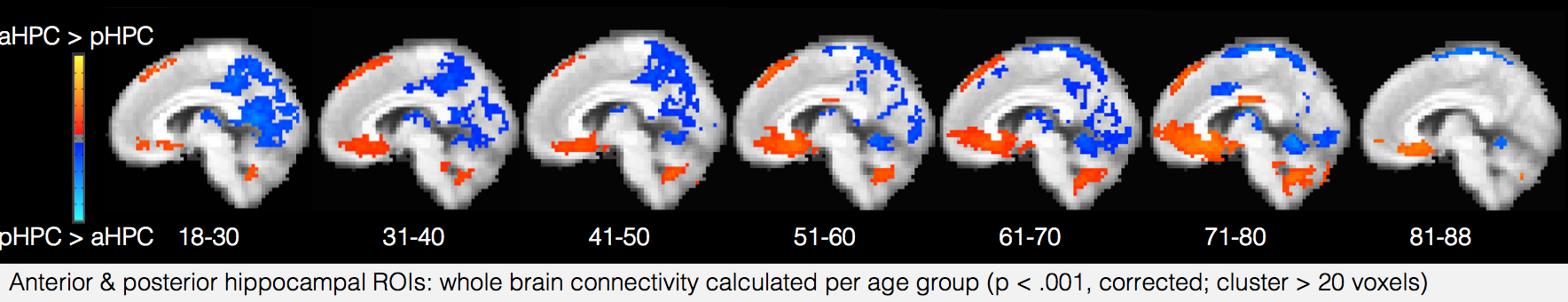

The interaction between the two halves of the hippocampus also changes - while in young adults activity timecourses in the posterior and anterior hippocampus are uncorrelated, they start to become anti-correlated in older adults, perhaps suggesting that the complementary relationship between the two regions has started to break down. Also, their functional connectivity with the rest of the brain shifts over time, with posterior hippocampus decoupling from posterior medial regions and anterior hippocampus increasing its coupling to medial prefrontal regions.

These results raise a number of intriguing questions about the cause of these shifts, and their impacts on cognition and memory throughout the lifespan. Is this shift toward greater coupling with regions that represent coarse-grained schematic information compensating for degeneration in regions that represent details? What is the “best” balance between coarse- and fine-timescale information for processing complex stimuli like movies and narratives, and at what age is it achieved? How do these regions mature before age 18, and how do their developmental trajectories vary across people? By following the analysis approach of Iva and her colleagues on new datasets, we should hopefully be able to answer many of these questions in future studies.

The Science of Scientific Bias

This year’s David Kopf lecture on Neuroethics, entitled “The Fallacy of Fairness: Diversity in Academic Science”, was given by Dr. Jo Handelsman. Dr. Handelsman is a microbiologist who recently spent three years as the Associate Director for Science at the White House Office of Science and Technology Policy, and has also led some of the most well-known studies of gender bias in science.

She began her talk by pointing out that increasing diversity in science is not only a moral obligation, but also has major potential benefits for scientific discovery. Diverse groups have been shown to produce more effective, innovative, and well-reasoned solutions to complex problems. I think this is especially true in psychology - if we are trying to create theories of how all humans think and act, we shouldn’t be building teams composed of a thin slice of humanity.

Almost all scientists agree in principle that we should not be discriminating based on race or gender. However, the process of recruiting, mentoring, hiring, and promotion relies heavily on “gut feelings” and subtle social cues, which are highly susceptible to implicit bias. Dr. Handelsman covered a wide array of studies over the past several decades, ranging from observational analyses to randomized controlled trials of scientists making hiring decisions. I’ll just mention two of the studies she described which I found the most interesting:

- How is it possible that people can make biased decisions, but still think they were objective when they reflect on those decisions? A fascinating study by Uhlmann & Cohen showed that subjects rationalized biased hiring decisions after the fact by redefining their evaluation criteria. For example, when choosing whether to hire a male candidate or a female candidate, who both had (randomized) positive and negative aspects to their resumes, the subjects would decide that the positive aspects of the male candidate were the most important for the job and that he therefore deserved the position. This is interestingly similar to the way that p-hacking distorts scientific results, and the solution to the problem may be the same. Just as pre-registration forces scientists to define their analyses ahead of time, Uhlmann & Cohen showed that forcing subjects to commit to their importance criteria before seeing the applications eliminated the hiring bias.

- Even relatively simple training exercises can be effective in making people more aware of implicit bias. Dr. Handelsman and her colleagues created a set of short videos called VIDS (Video Interventions for Diversity in STEM), consisting of narrative films illustrating issues that have been studied in the implicit bias literature, along with expert videos describing the findings of these studies. They then ran multiple experiments showing that these videos were effective at educating viewers, and made them more likely to notice biased behavior. I plan on making these videos required viewing in my lab, and would encourage everyone working in STEM to watch them as well (the narrative videos are only 30 minutes total).

Drawing out visual memories

If you close your eyes and try to remember something you saw earlier today, what exactly do you see? Can you visualize the right things in the right places? Are there certain key objects that stand out the most? Are you misremembering things that weren’t really there?

Visual memory for natural images has typically been studied with recognition experiments, in which subjects have to recognize whether an image is one they have seen before or not. But recognition is quite different from freely recalling a memory (without being shown it again), and can involve different neural mechanisms. How can we study visual recall, testing whether the mental images people are recalling are correct?

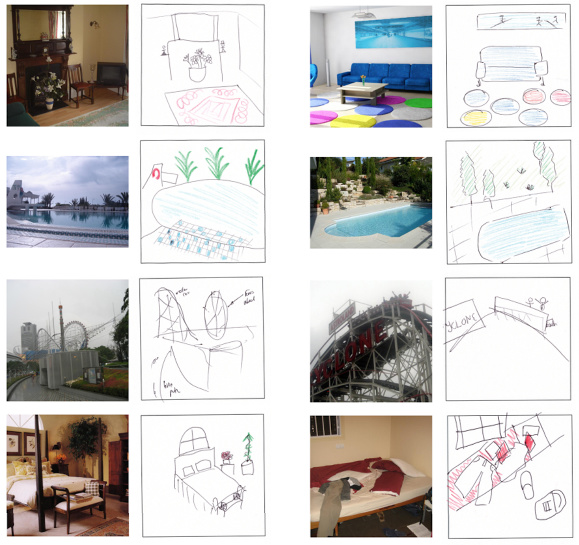

One option is to have subjects give verbal descriptions of what they remember, but this might not capture all the details of their mental representation, such as the precise relationships between the objects or whether their imagined viewpoint of the scene is correct. Instead, NIMH researchers Elizabeth Hall, Wilma Bainbridge, and Chris Baker had subjects draw photographs from memory, and then analyzed the contents of those drawings.

This is a creative but challenging approach, since it requires quantitatively characterizing how well the drawings (all 1,728!) match the original photographs. They crowdsourced this task using Amazon Mechanical Turk, getting high-quality ratings that include: how well the original photograph can be identified based on the drawing, what objects were correctly drawn, what objects were falsely remembered as being in the image, and how close the objects were to their correct locations. For comparison, raters also scored “control” drawings made by subjects with full information (who could look at the image while they drew) or minimal information (just a category label).

The punchline is that subjects can remember many of the images, and produce surprisingly detailed drawings that are quite similar to those drawn by the control group that could look at the pictures. They reproduce the majority of the objects, place them in roughly the correct locations, and draw very few incorrect objects, making it very easy to match the drawings with the original photographs. The only systematic distortion is that the drawings depicted the scenes as being slightly farther away than they actually were, which nicely replicates previous results on boundary extension.

This is a neat task that subjects are remarkably good at (which is not always the case in memory experiments!), and could be a great tool for investigating the neural mechanisms of naturalistic perception and memory. Another intriguing SfN presentation showed that it is possible to have subjects draw while in an fMRI scanner, allowing this paradigm to be used in neuroimaging experiments. I wonder if this approach could also be extended into drawing comic strips of remembered events that unfold over time, or to illustrate mental images based on stories told through audio or text.

Comments? Complaints? Contact me @ChrisBaldassano